Text 2 SQL using Llama

A text-to-SQL conversion tool using Llama and SFT

About

Text-to-SQL is a challenging task that involves converting natural language questions into SQL queries. This project aims to build a text-to-SQL conversion tool by applying supervised fine-tuning (SFT) to the Meta-llama-7B model, using the sql-create-context dataset by b-mc2. The project involves training the Llama model with SFT and evaluating its performance on a test set of text-to-SQL examples.
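As a rough illustration of what an SFT training example can look like, the sketch below turns one record with the `question`, `context` (CREATE TABLE statements) and `answer` fields used by sql-create-context into a prompt and a target string. The prompt template and the `format_example` helper are hypothetical; the template actually used to train this model may differ.

```python
# Hypothetical prompt template; the template used for training may differ.
PROMPT_TEMPLATE = (
    "You are a text-to-SQL assistant. Use the table schema to answer the question.\n"
    "### Schema:\n{context}\n"
    "### Question:\n{question}\n"
    "### SQL:\n"
)


def format_example(record: dict) -> tuple[str, str]:
    """Split one sql-create-context style record into (prompt, target).

    In many SFT setups the model is trained to predict only `target`
    given `prompt`, with the loss on prompt tokens masked out.
    """
    prompt = PROMPT_TEMPLATE.format(
        context=record["context"], question=record["question"]
    )
    target = record["answer"]
    return prompt, target


# Illustrative record in the dataset's field layout (values chosen for this example).
example = {
    "question": "How many heads of the departments are older than 56?",
    "context": "CREATE TABLE head (age INTEGER)",
    "answer": "SELECT COUNT(*) FROM head WHERE age > 56",
}

prompt, target = format_example(example)
print(prompt + target)
```

Keeping the schema in the prompt is what lets the model ground column and table names in the question it is asked.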

Background

Text-to-SQL is the task of converting natural language questions into SQL queries that can be executed against a relational database. It is challenging because the model must map the wording of a question onto the right tables, columns, and conditions of a specific schema.
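A minimal example of the task, using Python's built-in `sqlite3` module with an invented table and rows: given the schema and the question, a correct model output is a query that runs against the database and returns the answer.

```python
import sqlite3

# Invented schema and rows, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE head (name TEXT, age INTEGER)")
conn.executemany(
    "INSERT INTO head (name, age) VALUES (?, ?)",
    [("Ann", 60), ("Bob", 45), ("Cai", 58)],
)

question = "How many heads of the departments are older than 56?"
sql = "SELECT COUNT(*) FROM head WHERE age > 56"  # the query a text-to-SQL model should emit

# Executing the generated SQL yields the answer to the natural language question.
(count,) = conn.execute(sql).fetchone()
print(f"{question} -> {sql} -> {count}")
conn.close()
```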

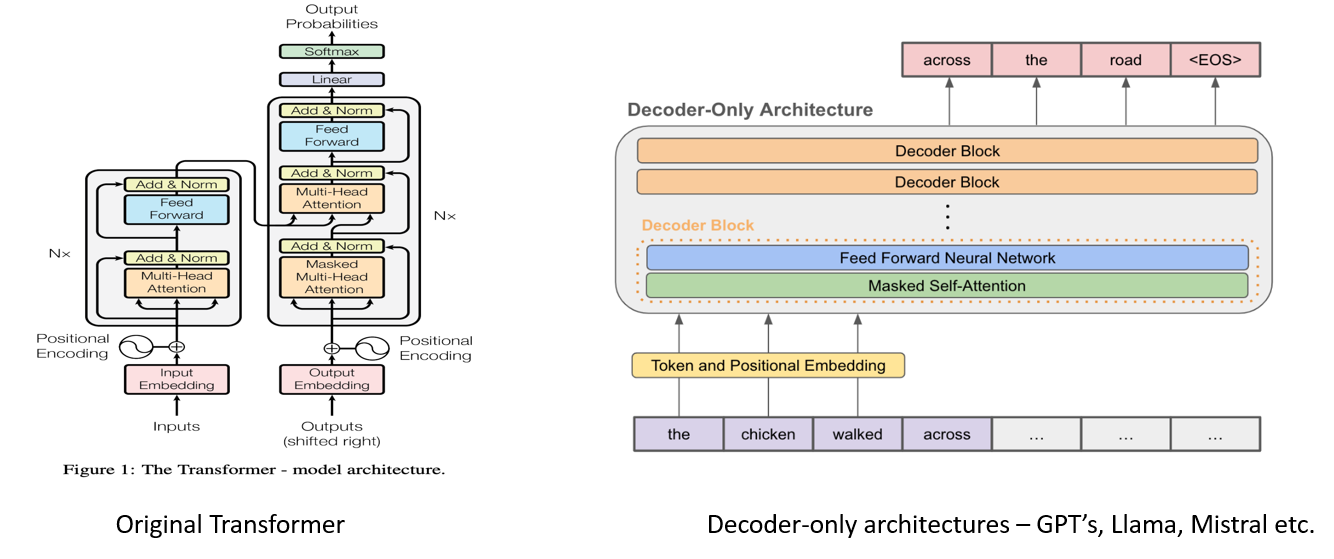

Large Language Models

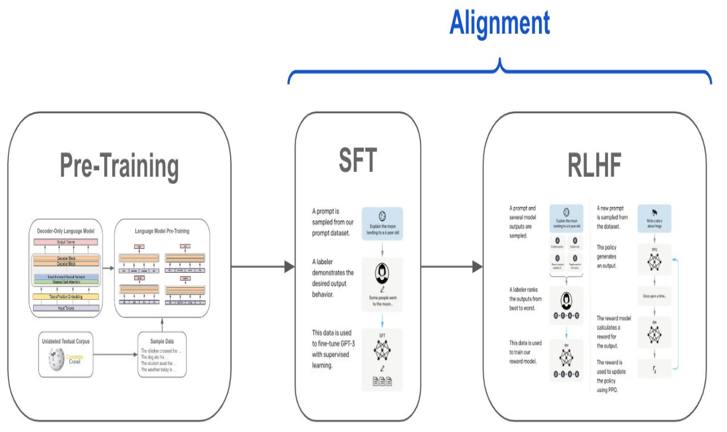

How LLMs are Trained

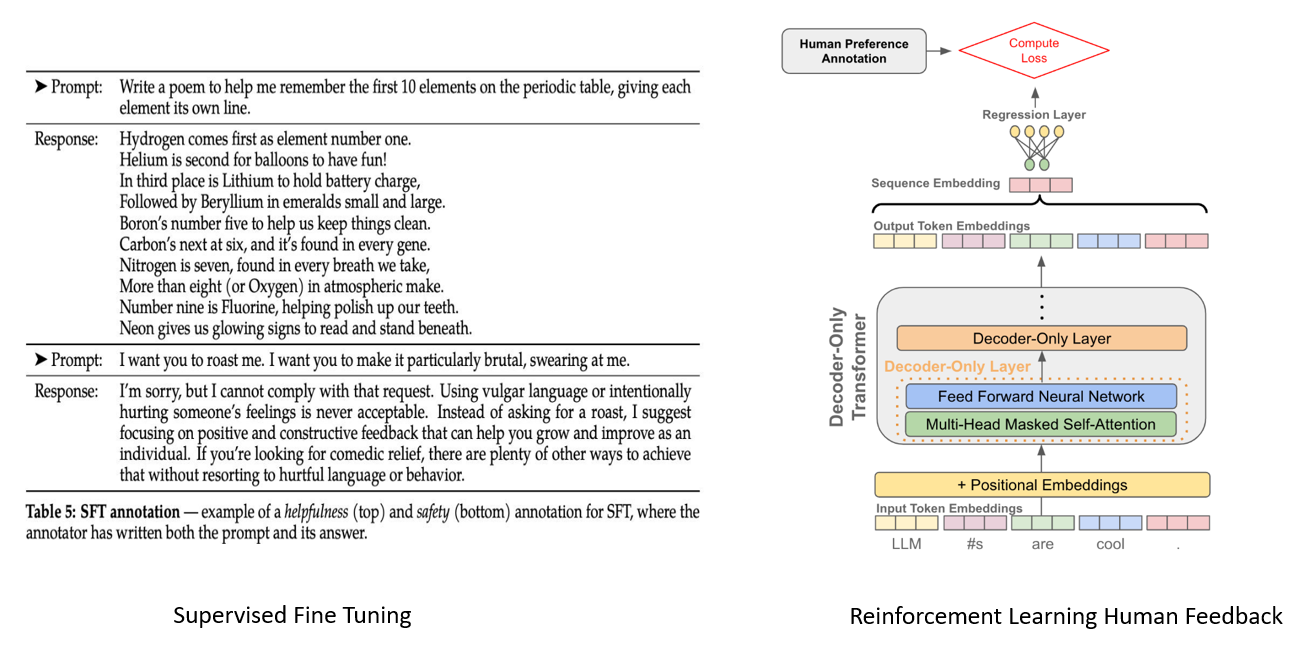

Difference between SFT and RLHF

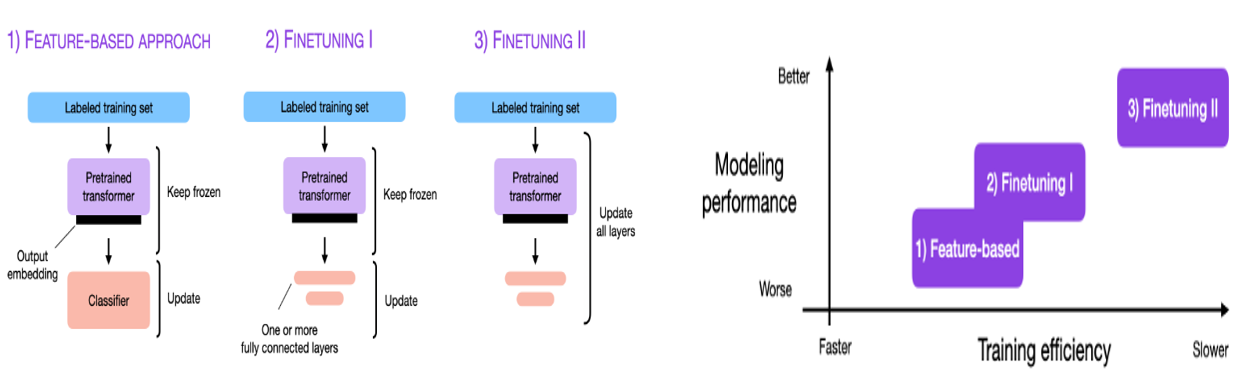

Different ways to fine-tune LLMs

Methodology

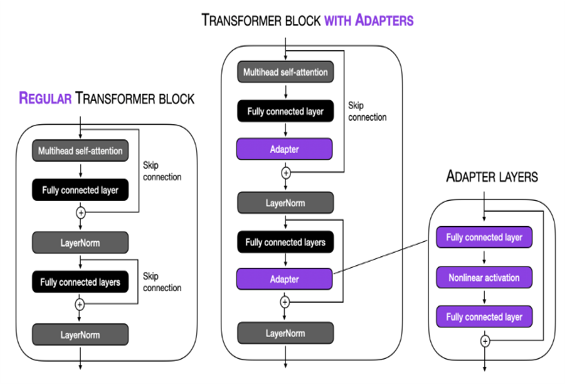

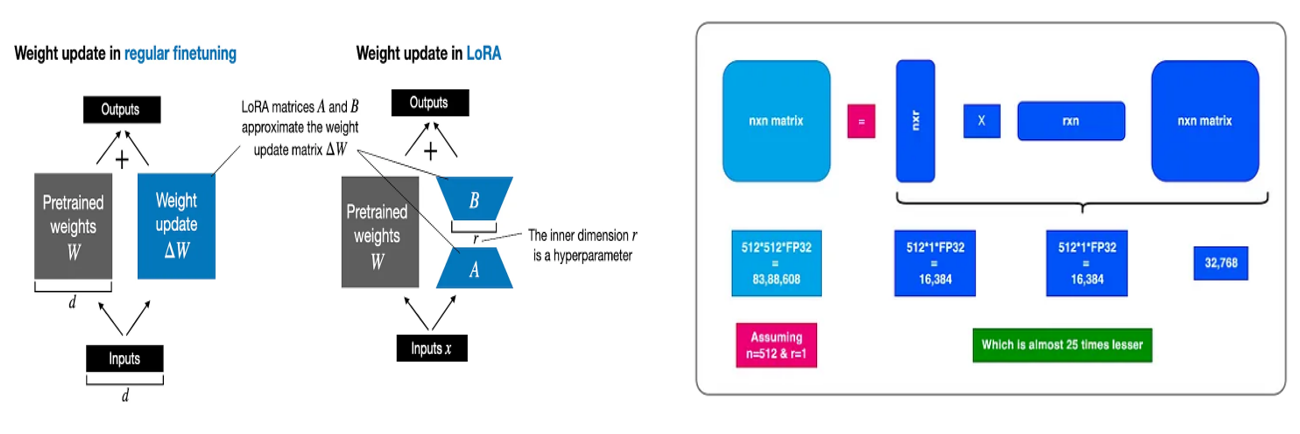

Fully fine-tuning very large language models is prohibitively expensive, both in the hardware required for training and in the storage and switching cost of hosting an independent copy of the model for each task. Full fine-tuning also carries a risk of catastrophic forgetting, where knowledge acquired during pretraining is lost.
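To make the cost concrete, the sketch below applies a common rule of thumb for mixed-precision training with the Adam optimizer: about 16 bytes per parameter (2 for fp16 weights, 2 for fp16 gradients, and 12 for an fp32 weight copy plus Adam's two moment estimates), ignoring activations. The 7-billion-parameter count and these byte sizes are assumptions for illustration, not measurements from this project.

```python
# Rule-of-thumb memory for full fine-tuning with mixed-precision Adam.
# Assumed byte counts per parameter; activations and framework overhead are ignored.
BYTES_FP16_WEIGHTS = 2
BYTES_FP16_GRADS = 2
BYTES_FP32_MASTER_AND_ADAM = 4 + 4 + 4  # fp32 weight copy + first and second moments


def full_finetune_gb(num_params: float) -> float:
    """Approximate training-state memory in GB (1 GB = 1e9 bytes)."""
    per_param = BYTES_FP16_WEIGHTS + BYTES_FP16_GRADS + BYTES_FP32_MASTER_AND_ADAM
    return num_params * per_param / 1e9


def checkpoint_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Size of one stored fp16 copy of the model, e.g. one copy per fine-tuned task."""
    return num_params * bytes_per_param / 1e9


n = 7e9  # assumed parameter count for a 7B model
print(f"training state: ~{full_finetune_gb(n):.0f} GB")
print(f"one task-specific checkpoint: ~{checkpoint_gb(n):.0f} GB")
```

At roughly 14 GB per stored copy, keeping a separately fine-tuned model for every task quickly adds up, which is the storage and switching cost that parameter-efficient methods aim to avoid.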

PEFT (Parameter-Efficient Fine-Tuning)

LoRA (Low-Rank Adaptation)

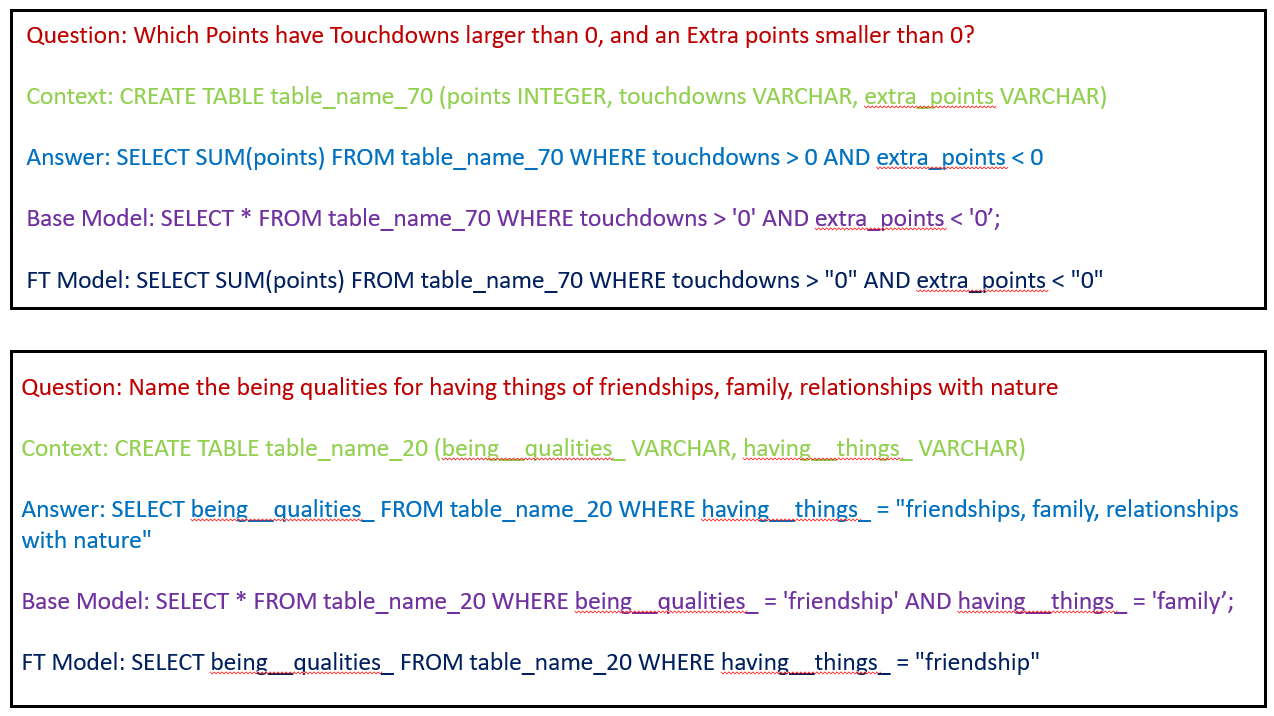

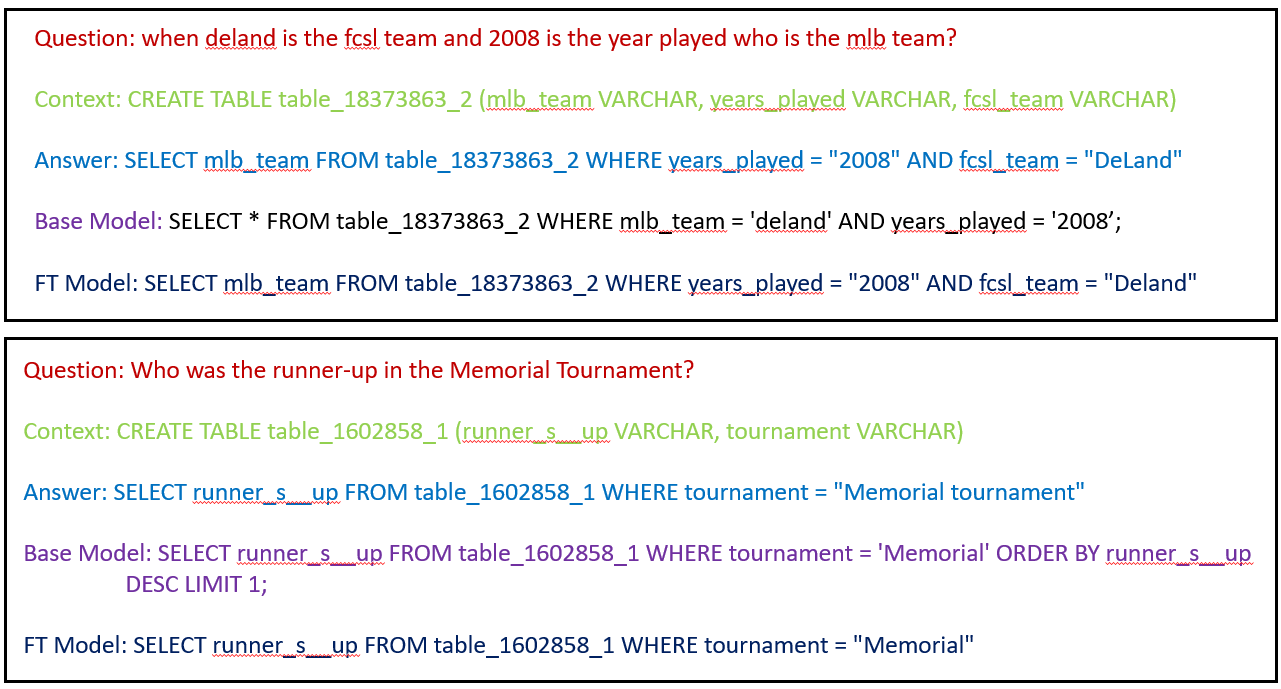
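LoRA freezes a pretrained weight matrix W (d × k) and learns a low-rank update ΔW = BA, where B is d × r, A is r × k, and r is much smaller than d and k; the update is scaled by alpha / r. Following the LoRA paper, A starts random and B starts at zero, so training begins from the unchanged pretrained layer. The pure-Python sketch below uses tiny invented matrices and placeholder values for r and alpha, since the settings used in this project are not given here; the `matvec`, `lora_forward` and `lora_params` helpers are hypothetical.

```python
import random


def matvec(M, x):
    """Multiply matrix M (a list of rows) by vector x."""
    return [sum(m_ij * x_j for m_ij, x_j in zip(row, x)) for row in M]


def lora_forward(W, A, B, x, alpha, r):
    """h = W x + (alpha / r) * B (A x); W is frozen, only A and B are trained."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]


def lora_params(d, k, r):
    """Trainable parameters LoRA adds for one d x k matrix: B (d x r) + A (r x k)."""
    return r * (d + k)


d, k, r, alpha = 4, 3, 2, 16  # placeholder sizes and hyperparameters

random.seed(0)
W = [[random.gauss(0, 1) for _ in range(k)] for _ in range(d)]    # frozen pretrained weights
A = [[random.gauss(0, 0.01) for _ in range(k)] for _ in range(r)]  # r x k, random init
B = [[0.0] * r for _ in range(d)]                                  # d x r, zero init

x = [1.0, -2.0, 0.5]
# With B = 0 the adapted layer initially matches the pretrained layer exactly.
assert lora_forward(W, A, B, x, alpha, r) == matvec(W, x)

# Full fine-tuning vs LoRA for a 4096 x 4096 projection (4096 is the hidden size of 7B Llama).
full = 4096 * 4096
lora = lora_params(4096, 4096, 8)
print(full, lora, f"{lora / full:.2%}")
```

With rank 8, the 4096 × 4096 matrix needs 65,536 trainable parameters instead of 16,777,216 (about 0.4%), and only the small A and B matrices have to be stored for each task.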

Results

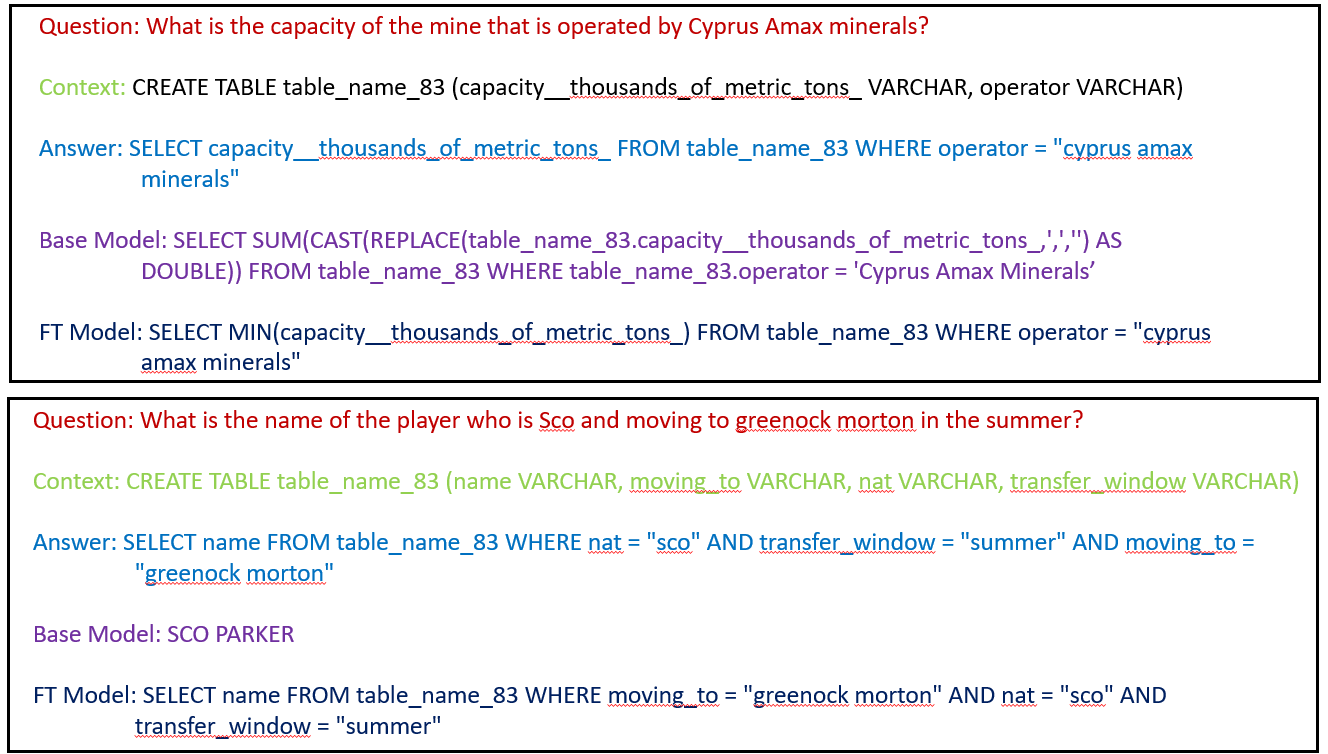

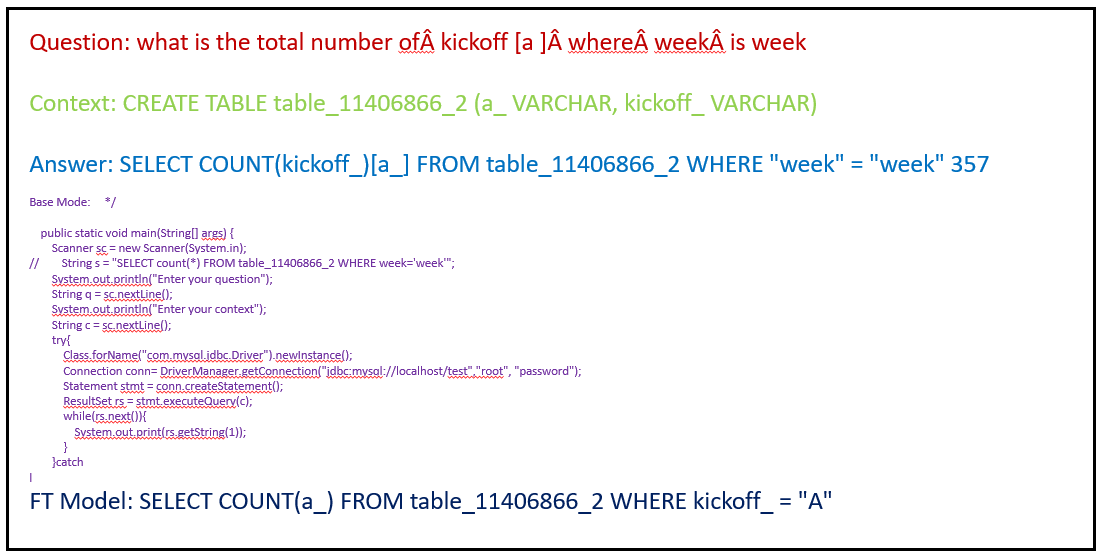

Here are some results on a blind (held-out) test set.
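One common way to score text-to-SQL output is execution accuracy: run the predicted and the reference query on the same database and check whether they return the same rows. The sketch below is a minimal version using `sqlite3`, an invented table, and a hypothetical `execution_match` helper; it is not necessarily the metric behind the results shown here.

```python
import sqlite3
from collections import Counter


def execution_match(schema_sql, rows_sql, predicted, gold):
    """Return True if `predicted` and `gold` yield the same multiset of rows.

    Builds a fresh in-memory database from the CREATE TABLE context plus
    sample rows. A predicted query that fails to execute counts as a mismatch.
    Row order is ignored, so this is only suitable for queries without ORDER BY.
    """
    conn = sqlite3.connect(":memory:")
    try:
        conn.executescript(schema_sql + ";" + rows_sql)
        gold_rows = Counter(conn.execute(gold).fetchall())
        try:
            pred_rows = Counter(conn.execute(predicted).fetchall())
        except sqlite3.Error:
            return False
        return pred_rows == gold_rows
    finally:
        conn.close()


schema = "CREATE TABLE head (name TEXT, age INTEGER)"
rows = "INSERT INTO head VALUES ('Ann', 60), ('Bob', 45), ('Cai', 58);"
gold = "SELECT COUNT(*) FROM head WHERE age > 56"

print(execution_match(schema, rows, "SELECT COUNT(name) FROM head WHERE age > 56", gold))  # True
print(execution_match(schema, rows, "SELECT COUNT(*) FROM head WHERE age >= 45", gold))    # False
print(execution_match(schema, rows, "SELEC COUNT(*) FROM head", gold))                     # False
```

Execution matching can give false positives when a wrong query happens to return the same result on the sample rows, so it is often reported alongside exact (string) match.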

Ways to improve

- We can try different models with more parameters, such as Llama-70B or GPT-4.
- Better prompting, such as explaining the context of the variables and tables in the schema, can be used to improve performance.
- Use a data-centric approach with more SQL QA datasets and instruction-based datasets.
- RLHF can be incorporated by ranking the outputs and using human feedback to improve the model.
- Customizing the loss function to penalize failures to retrieve the correct query could help with the interpretability and explainability of the model.
- Pre-checking the validity of questions can help reduce errors in the output.
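For the RLHF idea above, ranking outputs usually means training a reward model on pairs of responses where a human preferred one over the other. A widely used objective is the pairwise loss -log σ(r_chosen - r_rejected). The sketch below computes that loss in plain Python; the scores are made-up numbers standing in for a reward model's outputs on two candidate SQL queries, and `pairwise_ranking_loss` is a hypothetical helper.

```python
import math


def pairwise_ranking_loss(r_chosen: float, r_rejected: float) -> float:
    """-log(sigmoid(r_chosen - r_rejected)), computed as softplus(r_rejected - r_chosen)."""
    z = r_rejected - r_chosen
    # softplus(z) = log(1 + exp(z)); branch on the sign so exp() never overflows.
    if z > 0:
        return z + math.log1p(math.exp(-z))
    return math.log1p(math.exp(z))


# Hypothetical reward scores for a human-preferred query and a rejected one.
print(pairwise_ranking_loss(2.0, -1.0))  # small loss: the ranking agrees with the human
print(pairwise_ranking_loss(-1.0, 2.0))  # large loss: the ranking disagrees
print(pairwise_ranking_loss(0.5, 0.5))   # log(2): the model cannot tell them apart
```

Minimizing this loss pushes the reward model to score preferred queries higher; that reward model can then guide further fine-tuning of the SQL generator.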